Enterprise AI Playgrounds?

Recently, I found myself looking into options for running local AI models on my home lab. Ollama is widely adopted as an integration point across many open-source options. Jan continues to be developed and tweaked to support model parameter tuning with an incredibly straightforward GUI. As of late, Claude 3 Haiku has served the majority of my use cases from both a model and cost perspective. This whole ecosystem is sure to continue down a path to awesomeness, and it's nice to see so many solutions leverage a simple API key in order to provide enterprise-level quality and integration experiences, which can be easily implemented with authentication providers.

Fabric had once again piqued my curiosity as an enthusiast. Daniel Miessler created the tool to apply the way he thinks about consuming content and context, so that he can make better use of his time. As professionals, we often find ourselves down rabbit holes studying irrelevant context or learning about things in the past. That makes it critical to focus on what's of substance in order to stay ahead and use time effectively. Fabric is incredibly cool when paired with either a model provider or locally run models via Ollama. Its patterns let you make simple calls that cut down on noise. If you've ever found yourself on YouTube researching or learning something, it's often a sinkhole when it comes to finding content with real substance. Fabric allows you to leverage the YouTube Data API along with the model/hosting option of your choice in order to parse transcripts and get to the point. There are functions that can break this down in multiple ways to suit your learning style. This is a great take on how to approach learning, with prompt engineering functions built in at the core.
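To make the pattern idea concrete, here is a minimal sketch of the general technique: pair a reusable system prompt with raw input (such as a transcript) and send it to a locally running Ollama instance. This is not Fabric's own code. The pattern text, the function names, and the `llama3` model name are all assumptions for illustration; the endpoint is Ollama's default local address and may differ on your setup.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local port.
OLLAMA_URL = "http://localhost:11434/api/generate"

# Hypothetical pattern text written for this example; it is not one of Fabric's patterns.
SUMMARIZE_PATTERN = (
    "You extract the substance from long content. "
    "Return the main idea in one sentence, then up to five key points as bullets."
)


def build_request(transcript: str, model: str = "llama3") -> dict:
    """Combine the pattern (system prompt) with raw input into a request body."""
    return {
        "model": model,               # assumed model name; use one you have pulled
        "system": SUMMARIZE_PATTERN,  # the reusable "pattern"
        "prompt": transcript.strip(), # the noisy content to distill
        "stream": False,              # ask for one JSON object instead of a stream
    }


def summarize(transcript: str, model: str = "llama3") -> str:
    """Send the request to the local Ollama server and return the generated text."""
    body = json.dumps(build_request(transcript, model)).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        return json.loads(resp.read())["response"]
```

Calling `summarize(transcript_text)` with a transcript you have already fetched would return the condensed version. Keeping the request building separate from the network call makes it easy to swap in a different pattern or a hosted model provider later.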

As of late, I've been focusing on Lobe Chat. As we all know, responsible AI use is often very important to people who value their privacy. On the flip side, it's also a tool that can be leveraged to drive adoption and use cases within enterprises, which is quite enticing. Before you think I'm three sheets to the wind, let me try to justify why.

With the current cloud provider options, there's quite a bit of focus on the ease of setting up model playgrounds, which enable employees to start augmenting themselves by leveraging AI and ML. SageMaker is an early example within AWS, built to provide ML functions to users through Jupyter notebooks. We've seen the same within Bedrock as well, with a focus on AI models which leverage RAG. The problem is often not the playground, but the architecture required to provide access in a secure manner. This often increases cloud ops spend very quickly.

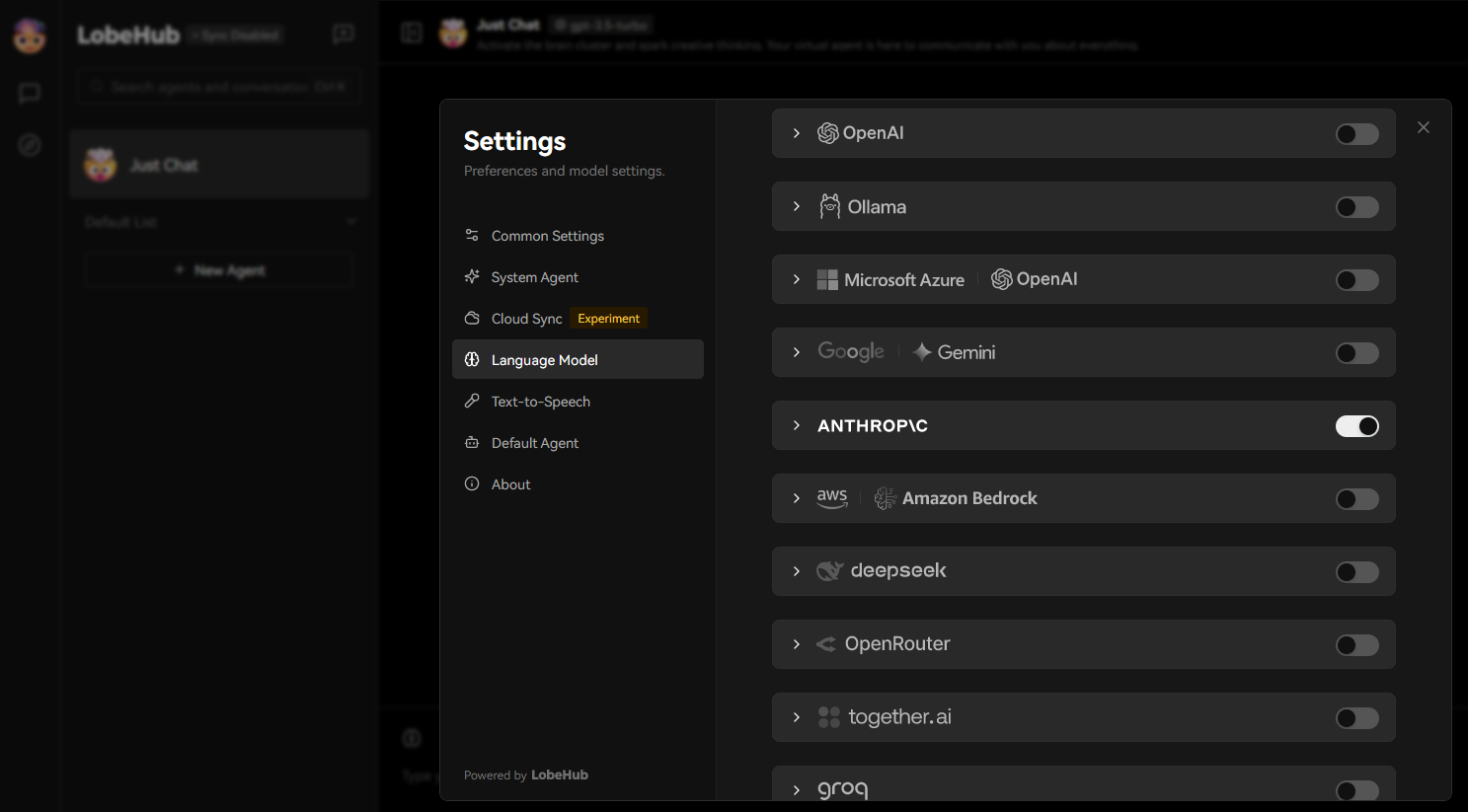

Lobe Chat provides not only an AI-ready playground but also a way to develop applications and sites using any model you can think of. The integration options for SSO already exist, and plugin support is prevalent. In terms of flexibility, it can serve as an application layer and leverage cloud provider options if that's your cup of tea. I ended up here as a result of seeing what's currently happening within the DoD with regard to enabling the usage of AI to optimize operations and efficiency. Below, I've included a screenshot of my self-hosted LobeHub in order to show some of the model and API integrations you can potentially leverage at the time of this writing. If you're looking to restrict this to local-only usage, Ollama is the clear answer.

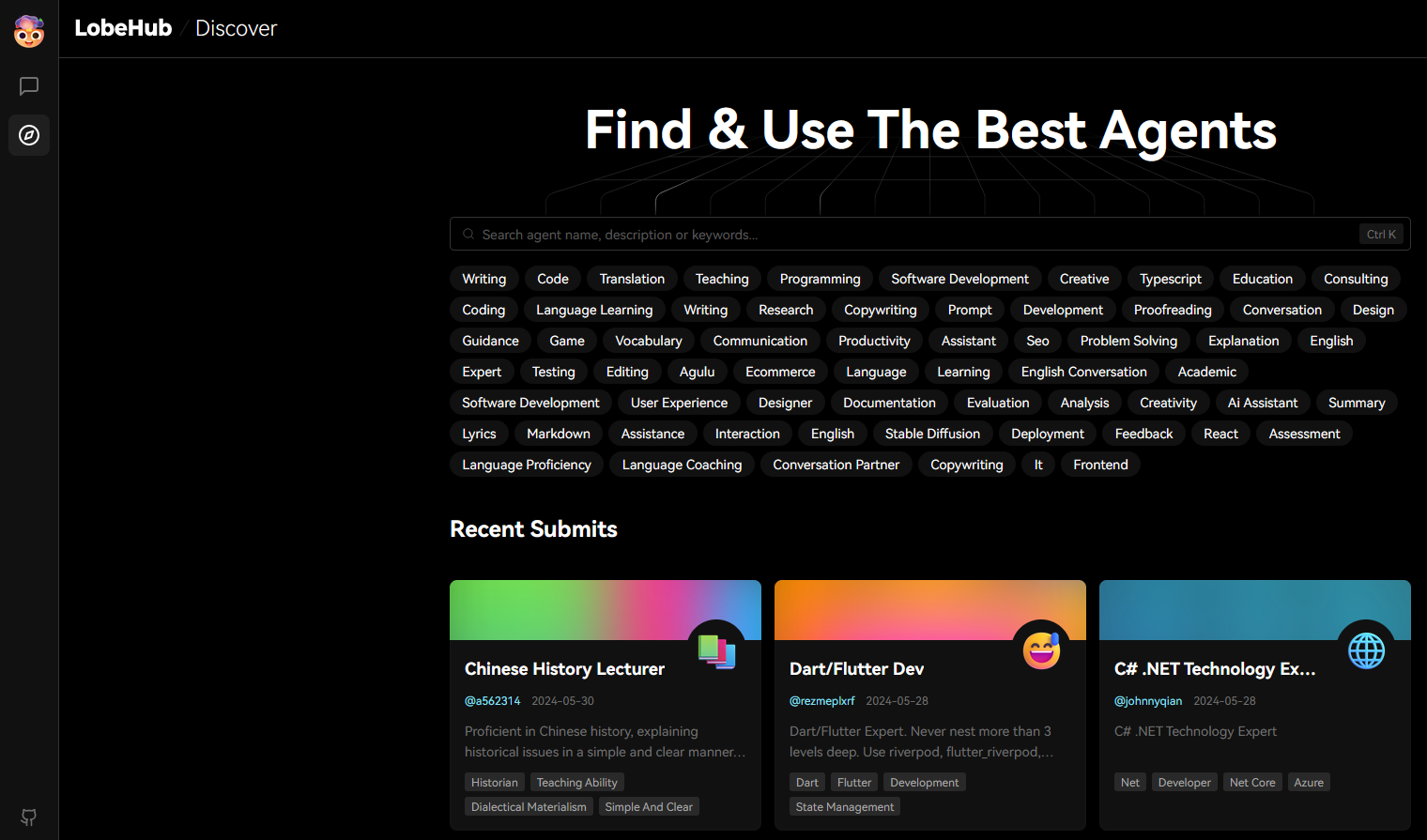
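If you do go the local-only route with Ollama, it can help to confirm which models are actually available on the box before wiring a front end to it. The sketch below is one way to do that, assuming Ollama is running on its default local port. The function names are made up for this example.

```python
import json
import urllib.request

# Assumption: Ollama's default local address; change it if yours differs.
OLLAMA_TAGS_URL = "http://localhost:11434/api/tags"


def model_names(tags_response: dict) -> list[str]:
    """Pull model names out of an /api/tags response, sorted for stable output."""
    return sorted(m["name"] for m in tags_response.get("models", []))


def fetch_local_models(url: str = OLLAMA_TAGS_URL) -> list[str]:
    """Ask the local Ollama server which models it has pulled."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return model_names(json.loads(resp.read()))
```

Running `fetch_local_models()` against a live instance returns the names of the models you have pulled, which are the ones you can expect to select for local usage.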

In terms of AI agent implementations, you're able to leverage existing solutions in a plugin-style manner. You're also able to train your own agents to do your bidding, should you feel the need to.

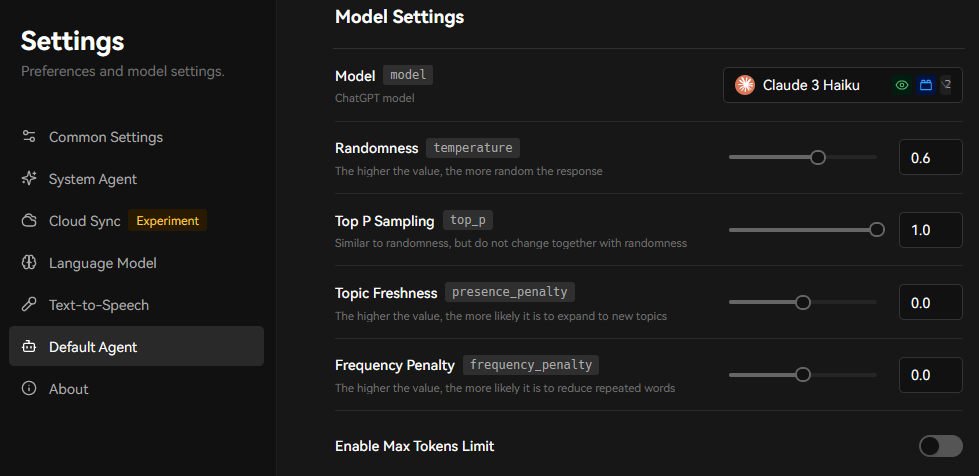

In terms of functions when working with models, you'll find image upload, voice conversations, image recognition, image/video generation, and the ability to change model settings, which are displayed below.

Spinning this up locally takes very little effort: about 2 minutes using Docker Compose. From there, you can log in and start taking a look around to familiarize yourself with the various UI functions. My main reason for writing this is to spread awareness and educate those coming at this from an outside-in perspective. The self-hosting documentation for Lobe Chat is located here. I hope you found your time spent reading this post useful.